Part 2: Generative AI – Market Research Angel or Devil? How to ensure you win in this new era

By Adam Mertz, Chief Growth Officer at Discuss

In part 1 of this blog series, I focused on the promise of generative AI and highlighted the three major areas of research most likely to be impacted by it over the next 1-2 years. Since that first post, I also presented at the 2023 TMRE conference with Sai Pisapati from Reckitt (link to the session is here if you are interested), where we showed this promise of gen AI in action at Reckitt. To recap from that first post, the three areas with significant potential to be positively impacted are shown below.

1. Setting up research, which can include:

- Developing personas

- Identifying segments

- For qual research – creating screeners as well as pre-work follow-ups

2. Collecting feedback, such as:

- For quant research – creating survey questions

- For qual research – creating discussion guides, async questions, and moderation suggestions

3. Analyzing responses, including:

- Summarizing responses across audience segments

- Highlighting project-level themes and quotes

- For qual research – suggesting or auto-creating highlight reels

In this post, as promised, I will discuss a critical topic for generative AI and market research:

Security-related questions and focus areas for using generative AI in compliance with global privacy regulations and your own information security team’s guidelines, so that respondent and organizational data remains secure and private.

Security

When it comes to generative AI, security is consistently the first question on everybody’s mind. And it should be, because market research involves a lot of important, private information that needs to be protected. If market researchers are going to embrace gen AI, they first need to make sure that whatever they implement, and whichever vendor they work with, keeps both respondent information and company information safe and secure.

Since the most common way of leveraging gen AI in market research will be partnering with technology vendors who have incorporated it into their applications, here are some key due-diligence questions to ask about safety and security:

Will your data be used to inform any large language models?

Obviously, you want to benefit from these models, but that doesn’t mean you want your data to contribute to them. Be sure to ask this question. Your organization’s data and your respondents’ data are likely part of a research project that was never meant for a public audience, so think hard before you allow either to be used to further build out a model that you don’t own.

Is the connection that’s being used to leverage the gen AI inputs, analysis, etc. private and secure?

This question pertains to whether a company has simply provided a window in its app into ChatGPT, for instance, or whether it uses a secure API that ensures all data is passed securely. In most cases, you will want to make sure a secure API is being used, although there may be some exceptions where that is not needed.

What happens to your data after it’s fed into the generative AI engine and processed?

You want clear documentation that all data is deleted from the engine after it uses your inputs. Again, your organization can and probably should maintain the data for some period of time, but you want to be the holder of that data rather than allowing the generative AI engine to hold it.

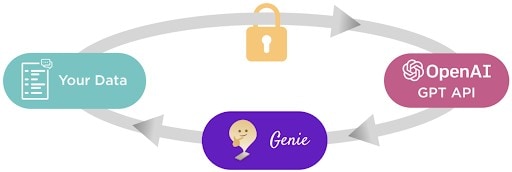

These are all important questions to ask vendors. To give you an idea of how Discuss handles the data exchange for our generative AI suite, Genie, I’ll briefly highlight the key pieces on the security front and how we’re handling them. First, we have a signed agreement with OpenAI (the makers of ChatGPT) to leverage their secure and private GPT API connection. Second, the engine takes the input, provides an output back to our app, and then promptly deletes that data; this process and the deletion of data are part of our contractual agreement with OpenAI. And last, as part of that agreement, any data sent to the engine for processing is not shared with or accessible to any other entity, no outside data is brought in, and none of OpenAI’s learning models are informed by or use that data in any way. The accompanying picture is a visual representation of this closed-loop system.

In summary, as leading market researchers race to embrace generative AI, the ones who will win are those who not only leverage it to maximize their research ROI but also minimize risk for their organization throughout the process. The good news is that with the right approach, and partner, the risk can be easily eliminated.

In my next post in this series, I will discuss the shift in focus that generative AI will likely drive between quantitative and qualitative research, and the reasons behind that shift.

Download our Data Privacy & Security Quick Guide

Discover how we prioritize GenAI data privacy and security. We employ a closed-loop system, ensuring your data remains confidential and is never utilized to train AI models. Through encrypted communication channels and strict compliance measures, we guarantee adherence to data protection regulations like GDPR. With a Zero-Data Retention policy and purpose-limited usage, Discuss ensures your information is safeguarded at every step. Join us in driving innovation for next-gen insights while safeguarding your data integrity.

About Adam Mertz –

Adam is responsible for leading the innovation, go-to-market, and growth strategies for Discuss. His past 2+ years with Discuss have been focused on driving a culture of obsessing over customer challenges in market research, and on innovation that enables customers to rethink what’s possible in scaling qual research. Adam frequently speaks on the new era market research is now in. Recently, he moderated a thought leader panel on the use of generative AI in market research, How to Change the Game With Generative AI in Market Research, which featured Rowan Curran, Forrester analyst focused on generative AI; Robin Lindberg, Principal at Quadrant Strategies; and Liz White, SVP of Research and Strategy at Buzzback. He also presented at TMRE’s 2024 annual conference with Sai Pisipati, Consumer Insights and Analytics Manager at Reckitt, in a session titled Scaling Global Empathy – What’s Worked and How Gen AI Changes the Game.
